GPU Convolution Optimization with CUDA

ECE 408: Applied Parallel Programming – Final Project (Individual)

- Tech Stack: CUDA, C++, Nsight Systems, Nsight Compute

- Dataset: Fashion MNIST

Overview

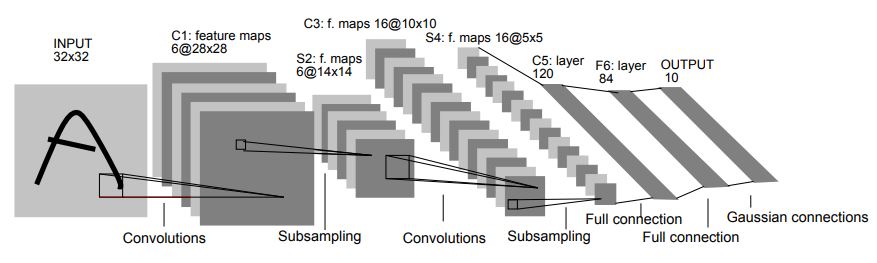

For my ECE 408 final project, I implemented and optimized the forward pass of a convolutional layer using CUDA. The goal was to improve the performance of a neural network layer used in inference for the Fashion MNIST dataset, all while maintaining accuracy and correctness. This project was completed individually and involved multiple GPU-level performance enhancements.

Optimization Focus

I experimented with several optimizations including FP16 precision arithmetic, shared and constant memory usage, stream-based concurrency, loop unrolling, and block size tuning. These optimizations were benchmarked using NVIDIA's Nsight Systems and Nsight Compute to assess their impact on kernel performance and memory efficiency.

Key Outcomes

The final optimized implementation achieved a combined Op Time of approximately 31ms for a batch size of 5000—meeting course targets for high-performance inference. Accuracy remained stable at around 0.871. Some optimizations improved SM throughput and reduced global memory bottlenecks, while others, like streams, introduced tradeoffs in synchronization overhead.

What I Learned

- Low-Precision Arithmetic (FP16): Explored half-precision tradeoffs, including memory savings and conversion overhead.

- Memory Hierarchy Optimization: Used shared and constant memory to reduce global memory access latency.

- Stream-Based Parallelism: Learned how to overlap memory transfers and computation using CUDA streams.

- Loop Unrolling & restrict: Improved inner-loop efficiency and allowed better compiler-level optimization.

- Profiling-Driven Development: Applied Nsight Systems and Nsight Compute to identify bottlenecks and tune performance.

Conclusion

This project gave me hands-on experience applying GPU programming techniques to a real-world deep learning workload. Beyond coding, I gained a deeper understanding of CUDA performance profiling, memory management, and kernel optimization strategies.